Natural Language Processing with Transformers

Using a BERT (Bidirectional Encoder Representations from Transformers) base model

The goal of this project was to build a sentiment classifier that predicts the sentiment of movie reviews. I used the Rotten Tomatoes movie reviews dataset, found here.

Most of the coding was done in PyCharm; training, however, was done in Google Colab to take advantage of its faster GPU.

I used the BERT tokenizer for my dataset and, later, a pre-trained BERT base model (bert-base-cased). More about the pre-trained model I used can be found here.

After loading the data, I needed to prepare it as two input tensors: input IDs and an attention mask. These are the two inputs the BERT base model takes. Here you can read an interesting explanation of BERT and how to use it. TensorFlow Hub also offers several pre-trained BERT base models you can use.
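To show what these two tensors contain, here is a minimal pure-Python sketch, assuming sequences of 512 tokens to match the model's input shape. `pad_and_mask` is a hypothetical helper, and the token IDs are made up apart from 101 (`[CLS]`), 102 (`[SEP]`) and 0 (`[PAD]`), which are BERT's special-token IDs. In practice the Hugging Face tokenizer builds both tensors directly, for example with `tokenizer(text, max_length=512, truncation=True, padding='max_length', return_tensors='tf')`.

```python
from typing import List, Tuple

PAD_ID = 0  # ID of the [PAD] token in BERT's vocabulary


def pad_and_mask(token_ids: List[int], max_len: int = 512) -> Tuple[List[int], List[int]]:
    """Truncate or pad token IDs to max_len and build the matching attention mask.

    The mask holds 1 for real tokens and 0 for padding, so BERT's attention
    ignores the padded positions.
    """
    # Naive truncation: a real tokenizer would keep [SEP] as the last token
    ids = token_ids[:max_len]
    mask = [1] * len(ids)
    n_pad = max_len - len(ids)
    return ids + [PAD_ID] * n_pad, mask + [0] * n_pad


# Made-up IDs for a short review, wrapped in [CLS] (101) ... [SEP] (102)
ids, mask = pad_and_mask([101, 1109, 2523, 1110, 1632, 102], max_len=10)
print(ids)   # [101, 1109, 2523, 1110, 1632, 102, 0, 0, 0, 0]
print(mask)  # [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
```

Repeating this for every review and stacking the results gives two `(num_reviews, 512)` arrays, one per model input.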

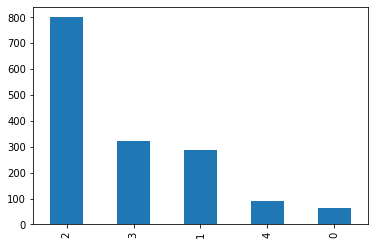

The sentiment labels in the Rotten Tomatoes dataset are the following:

- 0 - negative

- 1 - somewhat negative

- 2 - neutral

- 3 - somewhat positive

- 4 - positive
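With five classes, the classifier outputs one probability per label index. The sketch below shows the index-to-name lookup and one-hot encoding of a label, which is what a categorical cross-entropy loss expects. The loss used for training is not shown in this write-up, so one-hot targets are an assumption (integer labels would pair with sparse categorical cross-entropy instead). `LABELS` and `one_hot` are hypothetical names.

```python
from typing import Dict, List

# Sentiment labels of the Rotten Tomatoes dataset, indexed as listed above
LABELS: Dict[int, str] = {
    0: "negative",
    1: "somewhat negative",
    2: "neutral",
    3: "somewhat positive",
    4: "positive",
}


def one_hot(label: int, num_classes: int = len(LABELS)) -> List[int]:
    """Return a vector of num_classes zeros with a 1 at the label's index."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} is outside 0..{num_classes - 1}")
    return [1 if i == label else 0 for i in range(num_classes)]


print(LABELS[3], one_hot(3))  # somewhat positive [0, 0, 0, 1, 0]
```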

The label distribution in the dataset looked like this:

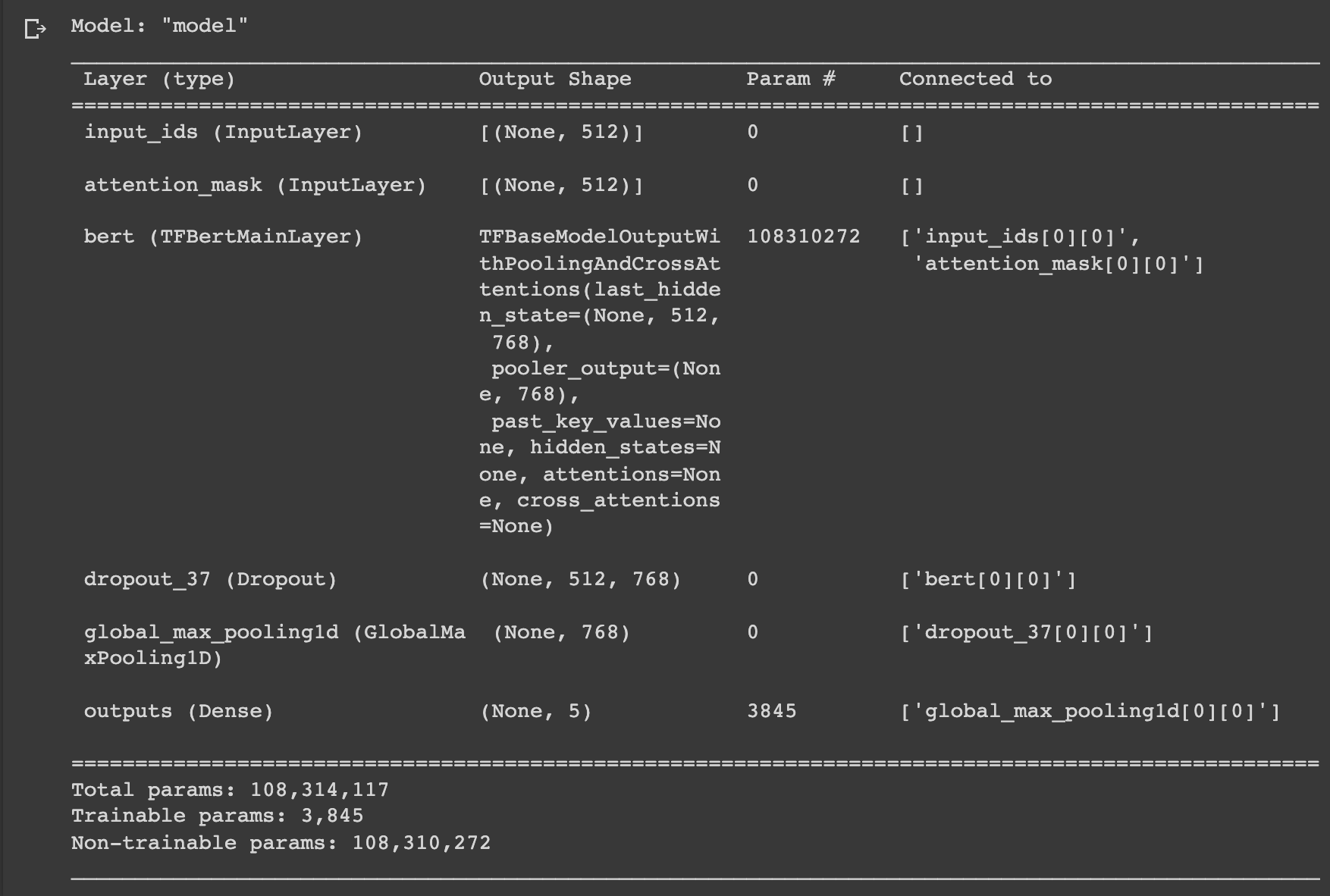
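A distribution like this can be computed by counting how often each label occurs. The following is a minimal standard-library sketch: `label_distribution` is a hypothetical helper, and the sample labels are invented for illustration, not taken from the dataset.

```python
from collections import Counter
from typing import Dict, Iterable

LABEL_NAMES = ["negative", "somewhat negative", "neutral", "somewhat positive", "positive"]


def label_distribution(labels: Iterable[int]) -> Dict[str, float]:
    """Return the fraction of samples for each sentiment label, in label order."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labels given")
    # Counter returns 0 for labels that never occur
    return {name: counts[i] / total for i, name in enumerate(LABEL_NAMES)}


# Made-up labels for illustration only; not the real dataset
sample = [2, 2, 2, 3, 1, 2, 4, 0, 3, 2]
for name, frac in label_distribution(sample).items():
    print(f"{name:>17}: {frac:.0%}")
```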

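The model feeds both inputs into BERT and adds a small classification head on top:

```python
import tensorflow as tf
from transformers import TFAutoModel

# Pre-trained BERT base model (cased)
bert = TFAutoModel.from_pretrained('bert-base-cased')

# Two inputs: token IDs and attention mask, both padded/truncated to 512 tokens
input_ids = tf.keras.layers.Input(shape=(512,), name='input_ids', dtype='int64')
mask = tf.keras.layers.Input(shape=(512,), name='attention_mask', dtype='int64')

# Transformer: [0] selects the last hidden state, shape (batch, 512, 768)
embeddings = bert.bert(input_ids, attention_mask=mask)[0]

# Classifier head
x = tf.keras.layers.Dropout(0.1)(embeddings)
x = tf.keras.layers.GlobalMaxPool1D()(x)  # max over the sequence axis -> (batch, 768)
y = tf.keras.layers.Dense(5, activation='softmax', name='outputs')(x)  # one probability per label

# Initialize model
model = tf.keras.Model(inputs=[input_ids, mask], outputs=y)

# Freeze the BERT layer (layers[2]) so only the classifier head is trained
model.layers[2].trainable = False

model.summary()
```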
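To make the shapes in the classifier head concrete, here is a NumPy sketch of what `GlobalMaxPool1D` and the softmax `Dense` layer compute. It is an illustration under stated assumptions, not the trained model: BERT's output is replaced by random stand-in values (hidden size 768, as in BERT base), the dense weights `W` and `b` are random rather than learned, and dropout is left out because Keras only applies it during training.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, seq_len, hidden, num_classes = 2, 512, 768, 5

# Random stand-in for BERT's last hidden state: (batch, seq_len, hidden)
embeddings = rng.normal(size=(batch, seq_len, hidden)).astype(np.float32)

# GlobalMaxPool1D: maximum over the sequence axis -> (batch, hidden)
pooled = embeddings.max(axis=1)

# Dense(5, activation='softmax') with random, untrained weights -> (batch, 5)
W = rng.normal(scale=0.02, size=(hidden, num_classes)).astype(np.float32)
b = np.zeros(num_classes, dtype=np.float32)
logits = pooled @ W + b
logits -= logits.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

print(pooled.shape, probs.shape)  # (2, 768) (2, 5)
```

Each row of `probs` sums to 1, and its argmax is the predicted label index. Because this sketch pools over all 512 positions, padded positions also contribute to the maximum.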

Once the model was trained, I saved it so I could later use it on other devices:

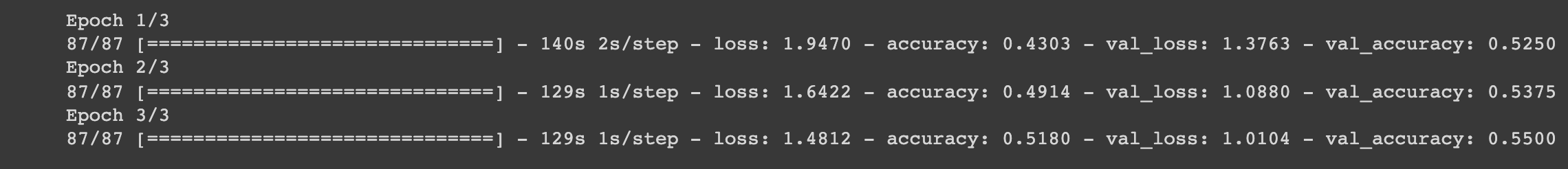

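```python
# train_ds and val_ds are the prepared training and validation datasets;
# model.compile(...) must have been called before fit (not shown here)
history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=3,
)

# Save the trained model so it can be reloaded later with tf.keras.models.load_model
model.save('sentiment_model')
```

As you can see, the number of epochs and batches used is low, which results in fairly low accuracy. Nevertheless, below you can see the model predicting the sentiment of several phrases, and the results were better than expected: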